Week Learning Objectives

By the end of this module, you will be able to:

- Describe, conceptually, what the likelihood function and maximum likelihood estimation are

- Describe the differences between maximum likelihood and restricted maximum likelihood

- Conduct statistical tests for fixed effects, and use the small-sample correction when needed

- Use the likelihood ratio test to test random slopes

- Estimate multilevel models with the Bayesian/Markov chain Monte Carlo estimator in the brms package

Task List

- Review the resources (lecture videos and slides)

- Complete the assigned readings

    - Snijders & Bosker ch. 4.7, 6, 12.1, 12.2

- Attend the Tuesday session and participate in the class exercise

- (Optional) Fill out the early/mid-semester feedback survey on Blackboard

Lecture

Slides

PDF version

Estimation methods

The values you obtained from MLM software (e.g., lme4) are

Likelihood function

In statistics, likelihood is the probability of

Which of the following is possible as a value for log-likelihood?

Estimation methods for MLM

Likelihood ratio test (LRT) for fixed effects

The LRT is widely used across many statistical methods, so it is helpful to know how to do it by hand, because it may not be available in every software package or procedure.

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | 12.650 | 12.662 |
| | (0.148) | (0.148) |
| meanses | 5.863 | 3.674 |
| | (0.359) | (0.375) |
| ses | | 2.191 |
| | | (0.109) |
| SD (Intercept id) | 1.610 | 1.627 |
| SD (Observations) | 6.258 | 6.084 |
| Num.Obs. | 7185 | 7185 |
| R2 Marg. | 0.123 | 0.167 |
| R2 Cond. | 0.178 | 0.223 |
| AIC | 46969.3 | 46578.6 |
| BIC | 46996.8 | 46613.0 |
| ICC | 0.06 | 0.07 |
| RMSE | 6.21 | 6.03 |

Using R and the pchisq() function, what are the $\chi^2$ (or X2) test statistic and the $p$ value for the fixed effect coefficient of ses?
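
If you want to check your hand calculation outside R, here is a minimal sketch that recovers each model's deviance (-2 log-likelihood) from the AIC values in the table and converts the difference into a $p$ value. It assumes that both models were fit with ML rather than REML, since REML likelihoods cannot be compared across models with different fixed effects. It also assumes Model 1 has 4 estimated parameters and Model 2 has 5, which is consistent with the AIC/BIC gaps in the table. Because AIC is rounded to 0.1, the statistic is only accurate to about that precision. Here `math.erfc` plays the role of R's `pchisq(x, df = 1, lower.tail = FALSE)`.

```python
import math

# Values copied from the table above (AIC rounded to one decimal place).
aic_m1, k_m1 = 46969.3, 4  # Model 1: intercept, meanses, SD(intercept), SD(residual)
aic_m2, k_m2 = 46578.6, 5  # Model 2: adds the fixed effect of ses

# ASSUMPTION: both models were fit with ML (not REML), so that
# AIC = deviance + 2k, where deviance = -2 * log-likelihood.
dev_m1 = aic_m1 - 2 * k_m1
dev_m2 = aic_m2 - 2 * k_m2

# LRT statistic = drop in deviance; df = number of extra parameters.
lrt_stat = dev_m1 - dev_m2
lrt_df = k_m2 - k_m1


def chisq_sf_df1(x: float) -> float:
    """Upper-tail probability P(X > x) for X ~ chi-squared(1).

    Same quantity as R's pchisq(x, df = 1, lower.tail = FALSE).
    """
    return math.erfc(math.sqrt(x / 2))


p_value = chisq_sf_df1(lrt_stat)
print(f"chi2({lrt_df}) = {lrt_stat:.1f}, p = {p_value:.3g}")
```

With R and the actual fitted objects, the same numbers come from the log-likelihoods directly. That route avoids the rounding in the AIC column.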

$F$ test with small-sample correction

From the results below, what are the $F$ test statistic and the $p$ value for the fixed effect coefficient of meanses?

| | Sum Sq | Mean Sq | NumDF | DenDF |
|---|---|---|---|---|
| meanses | 324.3935 | 324.3935 | 1 | 15.5060 |
| ses | 1874.3379 | 1874.3379 | 1 | 669.0317 |

<form name="form_38879" onsubmit="return validate_form_38879()" method="post"><input type="radio" name="answer_38879" id="38879_1" value="F(1) = 57.53, p < .001"><label for="1">F(1) = 57.53, p < .001</label><br><input type="radio" name="answer_38879" id="38879_2" value="X2 = 324.39, df = 15.51, p = .006"><label for="2">X2 = 324.39, df = 15.51, p = .006</label><br><input type="radio" name="answer_38879" id="38879_3" value="F(1, 15.51) = 9.96, p = .006"><label for="3">F(1, 15.51) = 9.96, p = .006</label><br><input type="submit" value="check"></form><p id="result_38879"></p><script> function validate_form_38879() {var x, text; var x = document.forms["form_38879"]["answer_38879"].value;if (x == "F(1, 15.51) = 9.96, p = .006"){text = 'Correct 👍';} else {text = 'That is not correct. Rewatch the video if needed';} document.getElementById('result_38879').innerHTML = text; return false;} </script>
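
As a sketch of where the $p$ value comes from, the code below computes the upper-tail probability of an $F(1, 15.51)$ distribution at $F = 9.96$, the value taken from the answer options. In R this is `pf(9.96, 1, 15.506, lower.tail = FALSE)`. The Python version assumes no statistics library is available. It approximates the regularized incomplete beta function with Simpson's rule, which is adequate for this example but is not a general-purpose implementation.

```python
import math


def beta_kernel(t: float, a: float, b: float) -> float:
    """Integrand t^(a-1) * (1-t)^(b-1) of the incomplete beta function."""
    return t ** (a - 1) * (1 - t) ** (b - 1)


def reg_inc_beta(x: float, a: float, b: float, n: int = 20000) -> float:
    """Regularized incomplete beta I_x(a, b) via composite Simpson's rule.

    Only adequate when a >= 1 and x is comfortably below 1, so that the
    integrand is smooth on [0, x]; not a general-purpose routine.
    """
    h = x / n  # n must be even for Simpson's rule
    total = beta_kernel(0.0, a, b) + beta_kernel(x, a, b)
    for i in range(1, n):
        weight = 4 if i % 2 == 1 else 2
        total += weight * beta_kernel(i * h, a, b)
    integral = total * h / 3
    log_beta = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    return integral / math.exp(log_beta)


def f_sf(f: float, df1: float, df2: float) -> float:
    """P(F > f) for F ~ F(df1, df2), using P(F > f) = I_{df2/(df2 + df1*f)}(df2/2, df1/2)."""
    x = df2 / (df2 + df1 * f)
    return reg_inc_beta(x, df2 / 2, df1 / 2)


# F value from the answer options; numerator and denominator df from the table.
f_value, num_df, den_df = 9.96, 1, 15.5060
p_value = f_sf(f_value, num_df, den_df)
print(f"F({num_df}, {den_df:.2f}) = {f_value:.2f}, p = {p_value:.3f}")
```

Note how much the small denominator df matters. With DenDF around 669, as for ses, the same $F$ value would give a noticeably smaller $p$ value.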

For more information on REML and the Kenward-Roger (K-R) correction, see

- McNeish, D. (2017). Small sample methods for multilevel modeling: A colloquial elucidation of REML and the Kenward-Roger correction.

LRT for random slope variance

When testing whether the variance of a random slope term is zero, what needs to be done?
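
One commonly recommended approach starts from the fact that a variance cannot be negative. Its null value (0) therefore lies on the boundary of the parameter space, and the usual chi-squared reference distribution gives a $p$ value that is too large. Here is a minimal sketch. It assumes the fuller model adds one slope variance plus one intercept-slope covariance, and it compares the LRT statistic against a 50:50 mixture of $\chi^2(1)$ and $\chi^2(2)$. The statistic of 5.0 is hypothetical.

```python
import math


def chisq_sf_df1(x: float) -> float:
    """P(X > x) for X ~ chi-squared(1)."""
    return math.erfc(math.sqrt(x / 2))


def chisq_sf_df2(x: float) -> float:
    """P(X > x) for X ~ chi-squared(2), which is exponential with mean 2."""
    return math.exp(-x / 2)


def mixture_p_value(lrt_stat: float) -> float:
    """p value from a 50:50 mixture of chi-squared(1) and chi-squared(2).

    ASSUMPTION: the fuller model adds one random slope variance and one
    intercept-slope covariance, and the slope variance is 0 under the null.
    """
    return 0.5 * chisq_sf_df1(lrt_stat) + 0.5 * chisq_sf_df2(lrt_stat)


# HYPOTHETICAL LRT statistic (deviance difference), for illustration only.
lrt_stat = 5.0
naive_p = chisq_sf_df2(lrt_stat)         # ignores the boundary: too conservative
corrected_p = mixture_p_value(lrt_stat)  # accounts for the boundary
print(f"naive p = {naive_p:.4f}, mixture p = {corrected_p:.4f}")
```

In this hypothetical case, the boundary-corrected $p$ value is noticeably smaller than the naive $\chi^2(2)$ one.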

What does MCMC stand for?

Which distribution is used to make statistical conclusions for Bayesian parameter estimation?

Check your learning: Using R, compute the probability (joint density) of getting five students with scores of 23, 16, 5, 14, and 7 from a normally distributed population with given values of $\mu$ and $\sigma$.
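
Here is a sketch of the computation, written in Python rather than R. The function `normal_logpdf` mirrors R's `dnorm(x, mean, sd, log = TRUE)`. The joint density of independent observations is the product of their individual densities, or equivalently the exponential of the summed log densities. The values $\mu = 10$ and $\sigma = 8$ are hypothetical placeholders, so substitute the values given in the exercise.

```python
import math


def normal_logpdf(x: float, mu: float, sigma: float) -> float:
    """Log density of N(mu, sigma^2) at x; like R's dnorm(x, mu, sigma, log = TRUE)."""
    return -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)


scores = [23, 16, 5, 14, 7]
mu, sigma = 10.0, 8.0  # HYPOTHETICAL parameter values for illustration

# Joint density of independent observations = product of individual densities.
joint_density = math.prod(math.exp(normal_logpdf(x, mu, sigma)) for x in scores)

# Equivalently: sum the log densities (the log-likelihood), then exponentiate.
log_lik = sum(normal_logpdf(x, mu, sigma) for x in scores)
print(f"joint density = {joint_density:.4g}, log-likelihood = {log_lik:.3f}")
```

Working on the log scale is what software does in practice, because products of many small densities quickly underflow.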

Check your learning: Using the principle of maximum likelihood, the best estimate for a parameter is one that


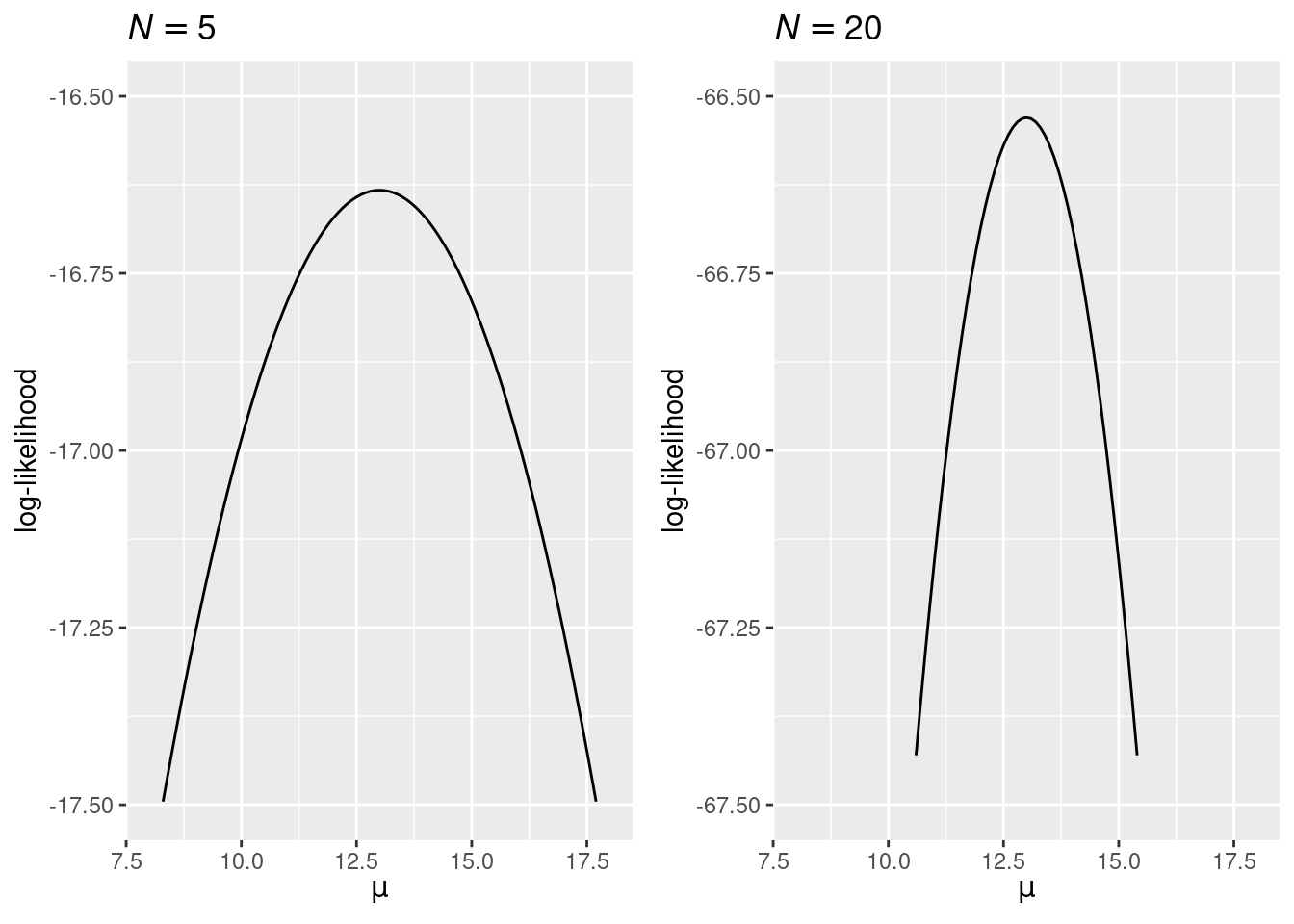
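
To see the principle numerically, the sketch below evaluates the normal log-likelihood of the five example scores (23, 16, 5, 14, 7) over a grid of candidate values of $\mu$. It then keeps the candidate with the highest log-likelihood. It assumes $\sigma = 8$ is known (a hypothetical value) so that only one parameter has to be searched.

```python
import math

scores = [23, 16, 5, 14, 7]
sigma = 8.0  # HYPOTHETICAL: treat sigma as known to keep the search one-dimensional


def log_lik(mu: float) -> float:
    """Normal log-likelihood of the scores as a function of mu (sigma fixed)."""
    return sum(
        -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)
        for x in scores
    )


# Candidate values of mu from 0 to 25 in steps of 0.01.
candidates = [i / 100 for i in range(0, 2501)]
mu_hat = max(candidates, key=log_lik)
print(f"ML estimate of mu = {mu_hat:.2f}; sample mean = {sum(scores) / len(scores):.2f}")
```

The maximizer coincides with the sample mean (13). Real software uses iterative optimizers instead of a grid, but the criterion being maximized is the same.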

Because a probability is less than 1, its logarithm is negative. By that logic, if the log-likelihood is -16.5 for a given sample size $N$, what should it be for a larger sample size?
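
One way to check your reasoning is to remember that the log-likelihood is a sum over observations. When each term is negative, as it is here, adding observations makes the sum more negative. The sketch below uses hypothetical values ($\mu = 10$, $\sigma = 8$). Purely for illustration, it builds a sample of 50 by repeating the five example scores (23, 16, 5, 14, 7) ten times, which makes the log-likelihood ten times as large in magnitude.

```python
import math


def normal_log_lik(data, mu: float, sigma: float) -> float:
    """Log-likelihood: the sum of normal log densities over all observations."""
    return sum(
        -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)
        for x in data
    )


scores = [23, 16, 5, 14, 7]
mu, sigma = 10.0, 8.0        # HYPOTHETICAL parameter values
bigger_sample = scores * 10  # N = 50: the same five scores repeated, for illustration

ll_small = normal_log_lik(scores, mu, sigma)
ll_big = normal_log_lik(bigger_sample, mu, sigma)
print(f"N = 5: {ll_small:.2f}; N = 50: {ll_big:.2f}")
```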

More about maximum likelihood estimation

If $\mu$ is not known, the maximum likelihood estimate of $\sigma^2$ is $\hat{\sigma}^2 = \sum_{i=1}^{N} (Y_i - \bar{Y})^2 / N$, which uses $N$ in the denominator instead of $N - 1$. Because of this, in small samples the maximum likelihood estimate of the variance is biased: on average, it underestimates the population variance.
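
The two estimators differ only in the denominator. The sketch below shows this for the five example scores (23, 16, 5, 14, 7): ML gives 210 / 5 = 42, and the usual sample variance gives 210 / 4 = 52.5. The second part is a small seeded simulation. It assumes a normal population with $\sigma = 8$ (variance 64) and samples of $N = 5$, so the ML estimates should average about $64 \times 4/5 = 51.2$ rather than 64.

```python
import random


def ml_variance(data):
    """ML estimate of the variance: squared deviations from the mean divided by N."""
    n = len(data)
    mean = sum(data) / n
    return sum((x - mean) ** 2 for x in data) / n


def unbiased_variance(data):
    """Usual sample variance: squared deviations from the mean divided by N - 1."""
    n = len(data)
    return ml_variance(data) * n / (n - 1)


scores = [23, 16, 5, 14, 7]
print(ml_variance(scores), unbiased_variance(scores))

# Simulation: population variance 64 (SD = 8), many samples of size N = 5.
rng = random.Random(2024)  # fixed seed so the run is reproducible
reps, n, pop_sd = 20000, 5, 8.0
ml_est, unbiased_est = [], []
for _ in range(reps):
    sample = [rng.gauss(0.0, pop_sd) for _ in range(n)]
    ml_est.append(ml_variance(sample))
    unbiased_est.append(unbiased_variance(sample))

# Averages should be near 64 * (N - 1) / N = 51.2 (ML) and 64 (unbiased).
print(sum(ml_est) / reps, sum(unbiased_est) / reps)
```

The bias factor $(N-1)/N$ shrinks toward 1 as $N$ grows, which is why the problem matters mainly in small samples.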

One useful property of maximum likelihood estimation is that the standard error can be approximated from the curvature of the log-likelihood function at its peak. The sampling variance (squared standard error) is approximately the inverse of that curvature, so the standard error is approximately one over its square root. The two graphs below show that, with a larger sample, the likelihood function has a higher curvature (i.e., it is steeper around the peak), which results in a smaller estimated standard error.
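
For a normal mean with $\sigma$ treated as known, the log-likelihood is exactly quadratic in $\mu$. This is a simplifying assumption, and $\sigma = 8$ is hypothetical. Under it, a finite-difference estimate of the curvature reproduces the familiar $\sigma / \sqrt{N}$. The sketch uses the five example scores (23, 16, 5, 14, 7) and then repeats them ten times to mimic a larger sample, so you can see the curvature grow and the standard error shrink.

```python
import math


def log_lik_mu(data, mu: float, sigma: float) -> float:
    """Normal log-likelihood as a function of mu, with sigma treated as known."""
    return sum(
        -math.log(sigma) - 0.5 * math.log(2 * math.pi) - (x - mu) ** 2 / (2 * sigma ** 2)
        for x in data
    )


def se_from_curvature(data, sigma: float, h: float = 0.01) -> float:
    """Approximate SE of the ML estimate of mu as 1 / sqrt(-second derivative).

    The second derivative at the peak (the sample mean) is estimated with a
    central finite difference of step h.
    """
    mu_hat = sum(data) / len(data)
    second_deriv = (
        log_lik_mu(data, mu_hat + h, sigma)
        - 2 * log_lik_mu(data, mu_hat, sigma)
        + log_lik_mu(data, mu_hat - h, sigma)
    ) / h ** 2
    return 1 / math.sqrt(-second_deriv)


scores = [23, 16, 5, 14, 7]
sigma = 8.0  # HYPOTHETICAL known sigma
se_small = se_from_curvature(scores, sigma)
se_large = se_from_curvature(scores * 10, sigma)  # N = 50, same scores repeated

# Compare with the textbook formula sigma / sqrt(N).
print(se_small, sigma / math.sqrt(5))
print(se_large, sigma / math.sqrt(50))
```

In multilevel models the log-likelihood is not exactly quadratic, so this curvature-based standard error is an approximation that improves with larger samples.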