# To install a package, run the following ONCE (and only once on your computer)

# install.packages("psych")

library(here) # makes reading data more consistent

library(tidyverse) # for data manipulation and plotting

library(haven) # for importing SPSS/SAS/Stata data

library(lme4) # for multilevel analysis

library(brms) # for Bayesian multilevel analysis

library(cAIC4) # for conditional AIC

library(glmmLasso) # for MLM lasso

library(MuMIn) # for model averaging

library(modelsummary) # for making tablesWeek 14 (R): Prediction

Load Packages and Import Data

The data is the first wave of the Cognition, Health, and Aging Project.

# Download the data from

# https://www.pilesofvariance.com/Chapter8/SPSS/SPSS_Chapter8.zip, and put it

# into the "data_files" folder

zip_data <- here("data_files", "SPSS_Chapter8.zip")

if (!file.exists(zip_data)) {

download.file(

"https://www.pilesofvariance.com/Chapter8/SPSS/SPSS_Chapter8.zip",

zip_data

)

}

stress_data <- read_sav(

unz(zip_data,

"SPSS_Chapter8/SPSS_Chapter8.sav"))

stress_data <- stress_data %>%

# Center mood (originally 1-5) at 1 for interpretation (so it becomes 0-4)

# Also women to factor

mutate(

mood1 = mood - 1,

women = factor(women,

levels = c(0, 1),

labels = c("men", "women")

)

)The data is already in long format. Let’s first do a subsample of 30 participants:

set.seed(1719)

random_persons <- sample(unique(stress_data$PersonID), 30)

stress_sub <- stress_data %>%

filter(PersonID %in% random_persons)# First, separate the time-varying variables into within-person and

# between-person levels

stress_sub <- stress_sub %>%

group_by(PersonID) %>%

# The `across()` function can be used to operate the same procedure on

# multiple variables

mutate(across(

c(symptoms, mood1, stressor),

# The `.` means the variable to be operated on

list(

"pm" = ~ mean(., na.rm = TRUE),

"pmc" = ~ . - mean(., na.rm = TRUE)

)

)) %>%

ungroup()Let’s use the model from a previous week

Level 1:

m1_sub <- brm(

symptoms ~ (mood1_pm + mood1_pmc) * women + (mood1_pmc | PersonID),

data = stress_sub,

seed = 2315,

file = "brms_cached_m1_sub"

)Predictions

# Cluster-specific

(obs1 <- stress_sub[1, c("PersonID", "mood1_pm", "mood1_pmc", "women",

"symptoms")])PersonID <dbl> | mood1_pm <dbl> | mood1_pmc <dbl> | women <fct> | symptoms <dbl> |

|---|---|---|---|---|

| 103 | 0 | 0 | women | 0 |

(pred1 <- predict(m1_sub, newdata = obs1))># Estimate Est.Error Q2.5 Q97.5

># [1,] 0.3066275 0.8235923 -1.319272 1.906006# Unconditional/marginal prediction

predict(m1_sub, newdata = obs1, re_formula = NA)># Estimate Est.Error Q2.5 Q97.5

># [1,] 0.8990274 0.7957485 -0.6645993 2.464147Prediction Error

In the graph below, the 90% predictive intervals are shown in skyblue, whereas the actual data are shown in darkblue. A good statistical model should have good preditive accuracy so that the skyblue dots and the red dots are close; a valid statistical model should have most of the skyblue intervals covering the observed data.

pred_df <- filter(

m1_sub$data,

PersonID %in% sample(unique(m1_sub$data$PersonID), 9)

)

pp_check(m1_sub, type = "intervals_grouped",

group = "PersonID", newdata = pred_df)># Using all posterior draws for ppc type 'intervals_grouped' by default.

Average In-Sample Prediction Error

Let’s consider the prediction error for everyone in the data subset

# Obtain predicted values for everyone

pred_all <- predict(m1_sub, re_formula = NA)

# Now, compute the prediction error

prederr_all <- m1_sub$data$symptoms - pred_all[, "Estimate"]

# Statisticians love to square the prediction error. The mean of the squared

# prediction error is called the mean squared error (MSE)

(mse_m1 <- mean(prederr_all^2))># [1] 1.040123# The square root of MSE, the root mean squared error (RMSE), can be considered

# the average prediction error (marginal)

(rmse_m1 <- sqrt(mse_m1))># [1] 1.019864Let’s now consider a model with more predictors:

# 35 main/interaction effects

m2_sub <- brm(

symptoms ~ (mood1_pm + mood1_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) +

(mood1_pmc + stressor | PersonID),

data = stress_sub,

seed = 2315,

file = "brms_cached_m2_sub"

)And check the prediction error:

pred_all <- predict(m2_sub, re_formula = NA)prederr_all <- m2_sub$data$symptoms - pred_all[, "Estimate"]

mse_m2 <- mean(prederr_all^2)

tibble(Model = c("M1", "M2"),

`In-sample MSE` = c(mse_m1, mse_m2))Model <chr> | In-sample MSE <dbl> | |||

|---|---|---|---|---|

| M1 | 1.040123 | |||

| M2 | 0.691197 |

You can see that the MSE drops with the more complex model. Does it mean that this more complex model should be preferred?

Out-Of-Sample Prediction Error

The problem of using in-sample prediction error to determine which model should be preferred is that the complex model will capture a lot of the noise in the data, making it not generalizable to other sample. In-sample prediction is not very meaningful, because if we already have the data, our interest is usually not to predict them. Instead, in research, we want models that will generalize to other samples. Therefore, learning all the noise in the sample is not a good idea, and will lead to overfitting—having estimates that are not generalizable to other samples.

To see this, let’s try to use the models we built on the 30 participants to predict symptoms for the remaining 75 participants:

# Get the remaining data

stress_test <- stress_data %>%

# Select participants not included in the previous models

filter(!PersonID %in% random_persons) %>%

# Person-mean centering

group_by(PersonID) %>%

# The `across()` function can be used to operate the same procedure on

# multiple variables

mutate(across(

c(symptoms, mood1, stressor),

# The `.` means the variable to be operated on

list(

"pm" = ~ mean(., na.rm = TRUE),

"pmc" = ~ . - mean(., na.rm = TRUE)

)

)) %>%

ungroup()

# Prediction error from m1

pred_all <- predict(m1_sub, newdata = stress_test, re_formula = NA)

prederr_all <- stress_test$symptoms - pred_all[, "Estimate"]

mse_m1 <- mean(prederr_all^2, na.rm = TRUE)

# Prediction error from m2

pred_all <- predict(m2_sub, newdata = stress_test, re_formula = NA)

prederr_all <- stress_test$symptoms - pred_all[, "Estimate"]

mse_m2 <- mean(prederr_all^2, na.rm = TRUE)

# Print out-of-sample prediction error

tibble(Model = c("M1", "M2"),

`Out-of-sample MSE` = c(mse_m1, mse_m2))Model <chr> | Out-of-sample MSE <dbl> | |||

|---|---|---|---|---|

| M1 | 1.846893 | |||

| M2 | 5.268427 |

As you can see from above, the prediction on data not used for building the model is worse. And m2 makes much worse out-of-sample prediction than m1, because when the sample size is small relative to the size of the model, a complex model is especially prone to overfitting, as it has many parameters that can be used to capitalize on the noise of the data.

As suggested before, we should care about out-of-sample prediction error than in-sample prediction error, so in this case m1 should be preferred. In practice, however, we don’t usually have the luxury of having another sample for us to get out-of-sample prediction error. So what should we do?

Cross-Validation

A simple solution is cross-validation, which is extremely popular in machine learning. The idea is to split the data into two parts, just like what we did above. Then fit the model in one part, and get the prediction error on the other part. The process is repeated

In model fitted using brms, you can use the kfold() option to do lme4::lmer() (because of the speed), which is mainly for you to understand its logic.

# Split the index of 30 participants into 5 parts

num_folds <- 5

random_sets <- split(

random_persons,

rep_len(1:num_folds, length(random_persons))

)

# Initialize the sum of squared prediction errors for each model

sum_prederr_m1 <- sum_prederr_m2 <- 0

# Loop over each set

for (setk in random_sets) {

# Fit model 1 on the subset

fit_m1 <- lmer(

symptoms ~ (mood1_pm + mood1_pmc) * women + (mood1_pmc | PersonID),

data = stress_sub,

# Select specific observations

subset = !PersonID %in% setk

)

# Remaining data

stress_sub_test <- stress_sub %>% filter(PersonID %in% setk)

# Obtain prediction error

pred_m1_test <- predict(fit_m1, newdata = stress_sub_test, re.form = NA)

prederr_m1 <- stress_sub_test$symptoms - pred_m1_test

sum_prederr_m1 <- sum_prederr_m1 + sum(prederr_m1^2, na.rm = TRUE)

# Fit model 2 on the subset

fit_m2 <- lmer(

symptoms ~ (mood1_pm + mood1_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) +

(mood1_pmc + stressor | PersonID),

data = stress_sub,

# Select specific observations

subset = !PersonID %in% setk

)

# Remaining data

stress_sub_test <- stress_sub %>% filter(PersonID %in% setk)

# Obtain prediction error

pred_m2_test <- predict(fit_m2, newdata = stress_sub_test, re.form = NA)

prederr_m2 <- stress_sub_test$symptoms - pred_m2_test

sum_prederr_m2 <- sum_prederr_m2 + sum(prederr_m2^2, na.rm = TRUE)

}># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')# MSE (dividing the sum by 150 observations)

tibble(Model = c("M1", "M2"),

`5-fold CV MSE` = c(sum_prederr_m1 / 150, sum_prederr_m2 / 150))Model <chr> | 5-fold CV MSE <dbl> | |||

|---|---|---|---|---|

| M1 | 1.176335 | |||

| M2 | 2.949056 |

# The estimated out-of-sample MSE is more than double for m2 than for m1Leave-one-out (LOO) cross validation

A special case of cross-validation is to use brms. So we can do

brms::loo(m1_sub, m2_sub)># Warning: Found 4 observations with a pareto_k > 0.7 in model 'm1_sub'. It is

># recommended to set 'moment_match = TRUE' in order to perform moment matching for

># problematic observations.># Warning: Found 16 observations with a pareto_k > 0.7 in model 'm2_sub'. It is

># recommended to set 'moment_match = TRUE' in order to perform moment matching for

># problematic observations.># Output of model 'm1_sub':

>#

># Computed from 4000 by 149 log-likelihood matrix

>#

># Estimate SE

># elpd_loo -188.9 16.1

># p_loo 31.7 6.6

># looic 377.8 32.2

># ------

># Monte Carlo SE of elpd_loo is NA.

>#

># Pareto k diagnostic values:

># Count Pct. Min. n_eff

># (-Inf, 0.5] (good) 141 94.6% 1087

># (0.5, 0.7] (ok) 4 2.7% 342

># (0.7, 1] (bad) 4 2.7% 14

># (1, Inf) (very bad) 0 0.0% <NA>

># See help('pareto-k-diagnostic') for details.

>#

># Output of model 'm2_sub':

>#

># Computed from 4000 by 149 log-likelihood matrix

>#

># Estimate SE

># elpd_loo -203.4 14.7

># p_loo 51.9 7.8

># looic 406.8 29.5

># ------

># Monte Carlo SE of elpd_loo is NA.

>#

># Pareto k diagnostic values:

># Count Pct. Min. n_eff

># (-Inf, 0.5] (good) 97 65.1% 812

># (0.5, 0.7] (ok) 36 24.2% 145

># (0.7, 1] (bad) 15 10.1% 12

># (1, Inf) (very bad) 1 0.7% 10

># See help('pareto-k-diagnostic') for details.

>#

># Model comparisons:

># elpd_diff se_diff

># m1_sub 0.0 0.0

># m2_sub -14.5 6.7which again suggested m1 is expected to have less out-of-sample prediction error. The p_loo is an estimate of the number of effective parameters in a model, which suggested that m2 is a more complex model than m1.

A Remark on Cross-Validation for Hierarchical Data

With hierarchical data, cross-validation can be done at different levels. What we did before was to split the sample of clusters, i.e., CV at the top level (i.e., level 2, or person). This kind of CV is most relevant when one is interested in prediction at the cluster level. Another way to do CV is to split the data within clusters. For example, here each person has five time points max, so we can use four time points to build model to predict the remaining time point.

# Add time to the data

stress_sub <- stress_sub %>%

group_by(PersonID) %>%

mutate(time = row_number())

# Initialize the sum of squared prediction errors for each model

sum_prederr_m1 <- sum_prederr_m2 <- 0

# Loop over each person

for (t in 1:5) {

# Fit model 1 on the data without personk

fit_m1 <- lmer(

symptoms ~ (mood1_pm + mood1_pmc) * women + (mood1_pmc | PersonID),

data = stress_sub,

# Select specific observations

subset = time != t

)

# Data for personk

stress_sub_test <- stress_sub %>% filter(time == t)

# Obtain prediction error

pred_m1_test <- predict(fit_m1, newdata = stress_sub_test, re.form = NA)

prederr_m1 <- stress_sub_test$symptoms - pred_m1_test

sum_prederr_m1 <- sum_prederr_m1 + sum(prederr_m1^2, na.rm = TRUE)

# Fit model 2 on the subset

fit_m2 <- lmer(

symptoms ~ (mood1_pm + mood1_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) +

(mood1_pmc + stressor | PersonID),

data = stress_sub,

# Select specific observations

subset = time != t

)

# Data for personk

stress_sub_test <- stress_sub %>% filter(time == t)

# Obtain prediction error

pred_m2_test <- predict(fit_m2, newdata = stress_sub_test, re.form = NA)

prederr_m2 <- stress_sub_test$symptoms - pred_m2_test

sum_prederr_m2 <- sum_prederr_m2 + sum(prederr_m2^2, na.rm = TRUE)

}># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')# MSE (dividing the sum by 150 observations)

tibble(

Model = c("M1", "M2"),

`5-fold Within-Cluster CV MSE` =

c(sum_prederr_m1 / 150, sum_prederr_m2 / 150)

)Model <chr> | 5-fold Within-Cluster CV MSE <dbl> | ||

|---|---|---|---|

| M1 | 1.088516 | ||

| M2 | 1.396415 |

Here, M1 is still better, but the difference is smaller. For more discussion on the difference, please check out this blog post, or the paper “Conditional Akaike information for mixed-effects models”.

Information Criteria

A closely-related way to estimate the out-of-sample prediction is to use information criteria, which is based on information theory. Simply speaking, these are measures of the expected out-of-sample prediction error under certain assumptions (e.g., normality). The most famous one is the Akaike information criterion (AIC), named after statistician Hirotugu Akaike, who derived that under certain assumptions, the expected prediction error is the deviance plus two times the number of parameters in the model. We can obtain AICs using the generic AIC() function for cluster-level predictions, and the cAIC4::cAIC() function for individual-level predictions.

# Refit with lme4 as glmmTMB did not converge

fit_m1 <- lmer(symptoms ~ (mood1_pm + mood1_pmc) * women +

(mood1_pmc | PersonID),

data = stress_sub)># boundary (singular) fit: see help('isSingular')fit_m2 <- lmer(symptoms ~ (mood1_pm + mood1_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) +

(mood1_pmc + stressor | PersonID),

data = stress_sub)># boundary (singular) fit: see help('isSingular')# Cluster-level prediction

AIC(fit_m1, fit_m2)df <dbl> | AIC <dbl> | |||

|---|---|---|---|---|

| fit_m1 | 10 | 399.4346 | ||

| fit_m2 | 43 | 399.9684 |

# Individual-level prediction

rbind(

m1 = cAIC(fit_m1)[c("df", "caic")],

m2 = cAIC(fit_m2)[c("df", "caic")]

)># boundary (singular) fit: see help('isSingular')># df caic

># m1 29.06952 367.2822

># m2 51.5599 388.6662Because AIC approximates the out-of-sample prediction error (for continuous, normal outcomes), a model with a lower AIC should be preferred. Here it shows m1 is better for individual-level prediction.

To avoid overfitting, we should compare models based on the out-of-sample prediction errors, which can be approxiamted by preferring models with lower AICs.

How about the BIC?

As a side note, AIC is commonly presented alongside BIC, the Bayesian information criterion (BIC). However, BIC was developed with very different motivations, and technically it is not an information criterion (and it is debatable whether it is Bayesian). However, it can be used in a similar way, where models showing lower BICs represent better fit. It tends to prefer models that are more parsimonious than the AIC.

Model Comparisons

Let’s compare several models:

mood1andstressor, no random slopesmood1_pm,mood1_pmc,stressor_pm,stressor, no random slopesmood1_pm,mood1_pmc,stressor_pm,stressor, random slopes- Model 3 + interaction terms:

mood1_pm:stressor_pm,mood1_pmc:stressor

m_1 <- lmer(

symptoms ~ mood1 + stressor + (1 | PersonID),

data = stress_sub, REML = FALSE

)

m_2 <- lmer(

symptoms ~ mood1_pm + mood1_pmc + stressor_pm + stressor +

(1 | PersonID),

data = stress_sub, REML = FALSE

)

m_3 <- lmer(

symptoms ~ mood1_pm + mood1_pmc + stressor_pm + stressor +

(mood1_pmc + stressor | PersonID),

data = stress_sub, REML = FALSE

)># boundary (singular) fit: see help('isSingular')m_4 <- lmer(

symptoms ~ mood1_pm * stressor + mood1_pmc * stressor +

(mood1_pmc + stressor | PersonID),

data = stress_sub, REML = FALSE

)># boundary (singular) fit: see help('isSingular')# Marginal AIC

AIC(m_1, m_2, m_3, m_4) # m_2 is the bestdf <dbl> | AIC <dbl> | |||

|---|---|---|---|---|

| m_1 | 5 | 404.2322 | ||

| m_2 | 7 | 403.5750 | ||

| m_3 | 12 | 410.2415 | ||

| m_4 | 13 | 414.3175 |

# Conditional AIC

rbind(

m_1 = cAIC(m_1)[c("df", "caic")],

m_2 = cAIC(m_2)[c("df", "caic")],

m_3 = cAIC(m_3)[c("df", "caic")],

m_4 = cAIC(m_4)[c("df", "caic")]

) # m_1, m_2, m_3 similar># df caic

># m_1 28.93959 369.3262

># m_2 28.75855 369.2557

># m_3 28.75855 369.2557

># m_4 30.80038 373.5176Overall, it seems (1) and (2) are best for predictions, with similar AICs.

Bonus: Model Averaging

# Use full data with centering

stress_data <- stress_data %>%

group_by(PersonID) %>%

# The `across()` function can be used to operate the same procedure on

# multiple variables

mutate(across(

c(symptoms, mood1, stressor),

# The `.` means the variable to be operated on

list(

"pm" = ~ mean(., na.rm = TRUE),

"pmc" = ~ . - mean(., na.rm = TRUE)

)

)) %>%

ungroup()# Require data without missing

stress_lm <- drop_na(stress_data)

m_full <- lmer(

symptoms ~ mood1_pm + mood1_pmc + stressor_pm + stressor +

women + baseage + weekend +

(mood1_pmc + stressor | PersonID),

na.action = "na.fail",

data = stress_lm,

REML = FALSE

)># boundary (singular) fit: see help('isSingular')# Fit all models with mood1_pm + mood1_pmc + stressor_pm + stressor, and

# compare marginal AIC

(dd_maic <- dredge(m_full,

fixed = ~ mood1_pm + mood1_pmc + stressor_pm + stressor,

rank = "AIC" # using marginal AIC

))># Fixed terms are "mood1_pm", "mood1_pmc", "stressor", "stressor_pm" and "(Intercept)"

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')># Warning in checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

># Model failed to converge with max|grad| = 0.01251 (tol = 0.002, component 1)># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')(Intercept) <dbl> | baseage <dbl> | mood1_pm <dbl> | mood1_pmc <dbl> | stressor <dbl> | ||

|---|---|---|---|---|---|---|

| 5 | 0.7357486 | NA | 1.841885 | 0.1215715 | 0.06793985 | |

| 1 | 0.4808882 | NA | 1.835174 | 0.1201676 | 0.06323212 | |

| 7 | 0.7580768 | NA | 1.847592 | 0.1285254 | 0.06200181 | |

| 6 | 0.9237537 | -0.002390708 | 1.857378 | 0.1223139 | 0.06764113 | |

| 3 | 0.5043704 | NA | 1.841540 | 0.1272051 | 0.05712722 | |

| 2 | 0.8610971 | -0.004779596 | 1.866309 | 0.1212418 | 0.06271041 | |

| 8 | 0.9212393 | -0.002079326 | 1.860923 | 0.1290436 | 0.06178816 | |

| 4 | 0.8560156 | -0.004425608 | 1.870275 | 0.1281590 | 0.05675211 |

summary(model.avg(dd_maic))>#

># Call:

># model.avg(object = dd_maic)

>#

># Component model call:

># lmer(formula = symptoms ~ <8 unique rhs>, data = stress_lm, REML =

># FALSE, na.action = na.fail)

>#

># Component models:

># df logLik AIC delta weight

># 23457 13 -701.83 1429.65 0.00 0.30

># 2345 12 -703.20 1430.40 0.75 0.20

># 234567 14 -701.63 1431.26 1.60 0.13

># 123457 14 -701.81 1431.63 1.97 0.11

># 23456 13 -702.99 1431.98 2.32 0.09

># 12345 13 -703.15 1432.30 2.64 0.08

># 1234567 15 -701.62 1433.24 3.58 0.05

># 123456 14 -702.94 1433.89 4.23 0.04

>#

># Term codes:

># baseage mood1_pm mood1_pmc stressor stressor_pm weekend

># 1 2 3 4 5 6

># women

># 7

>#

># Model-averaged coefficients:

># (full average)

># Estimate Std. Error Adjusted SE z value Pr(>|z|)

># (Intercept) 0.7093827 0.6571310 0.6586361 1.077 0.28146

># womenwomen -0.1963555 0.2226084 0.2228554 0.881 0.37827

># mood1_pm 1.8468342 0.3714699 0.3723709 4.960 7e-07 ***

># mood1_pmc 0.1233922 0.1400432 0.1403830 0.879 0.37942

># stressor 0.0640387 0.1002116 0.1004545 0.637 0.52381

># stressor_pm 0.8980961 0.3023394 0.3030718 2.963 0.00304 **

># weekend -0.0145762 0.0459695 0.0460563 0.316 0.75163

># baseage -0.0009017 0.0079126 0.0079311 0.114 0.90948

>#

># (conditional average)

># Estimate Std. Error Adjusted SE z value Pr(>|z|)

># (Intercept) 0.709383 0.657131 0.658636 1.077 0.28146

># womenwomen -0.333532 0.196013 0.196489 1.697 0.08961 .

># mood1_pm 1.846834 0.371470 0.372371 4.960 7e-07 ***

># mood1_pmc 0.123392 0.140043 0.140383 0.879 0.37942

># stressor 0.064039 0.100212 0.100455 0.637 0.52381

># stressor_pm 0.898096 0.302339 0.303072 2.963 0.00304 **

># weekend -0.046903 0.072688 0.072865 0.644 0.51977

># baseage -0.003287 0.014845 0.014881 0.221 0.82519

># ---

># Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Variable importance

sw(dd_maic)># mood1_pm mood1_pmc stressor stressor_pm women weekend

># Sum of weights: 1.00 1.00 1.00 1.00 0.59 0.31

># N containing models: 8 8 8 8 4 4

># baseage

># Sum of weights: 0.27

># N containing models: 4# Fit all models with mood1_pm + mood1_pmc + stressor_pm + stressor, and

# compare conditional AIC

(dd_caic <- dredge(m_full,

fixed = ~ mood1_pm + mood1_pmc + stressor_pm + stressor,

rank = function(x) cAIC4::cAIC(x)$caic # using conditional AIC

))># Fixed terms are "mood1_pm", "mood1_pmc", "stressor", "stressor_pm" and "(Intercept)"

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')># Warning in checkConv(attr(opt, "derivs"), opt$par, ctrl = control$checkConv, :

># Model failed to converge with max|grad| = 0.01251 (tol = 0.002, component 1)># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')

># boundary (singular) fit: see help('isSingular')(Intercept) <dbl> | baseage <dbl> | mood1_pm <dbl> | mood1_pmc <dbl> | stressor <dbl> | ||

|---|---|---|---|---|---|---|

| 3 | 0.5043704 | NA | 1.841540 | 0.1272051 | 0.05712722 | |

| 4 | 0.8560156 | -0.004425608 | 1.870275 | 0.1281590 | 0.05675211 | |

| 7 | 0.7580768 | NA | 1.847592 | 0.1285254 | 0.06200181 | |

| 1 | 0.4808882 | NA | 1.835174 | 0.1201676 | 0.06323212 | |

| 2 | 0.8610971 | -0.004779596 | 1.866309 | 0.1212418 | 0.06271041 | |

| 5 | 0.7357486 | NA | 1.841885 | 0.1215715 | 0.06793985 | |

| 6 | 0.9237537 | -0.002390708 | 1.857378 | 0.1223139 | 0.06764113 | |

| 8 | 0.9212393 | -0.002079326 | 1.860923 | 0.1290436 | 0.06178816 |

summary(model.avg(dd_caic))>#

># Call:

># model.avg(object = dd_caic)

>#

># Component model call:

># lmer(formula = symptoms ~ <8 unique rhs>, data = stress_lm, REML =

># FALSE, na.action = na.fail)

>#

># Component models:

># df logLik IC delta weight

># 23456 13 -702.99 1312.95 0.00 0.30

># 123456 14 -702.94 1313.22 0.27 0.26

># 234567 14 -701.63 1313.32 0.37 0.25

># 2345 12 -703.20 1316.04 3.09 0.06

># 12345 13 -703.15 1316.54 3.59 0.05

># 23457 13 -701.83 1317.02 4.08 0.04

># 123457 14 -701.81 1317.47 4.52 0.03

># 1234567 15 -701.62 1318.82 5.87 0.02

>#

># Term codes:

># baseage mood1_pm mood1_pmc stressor stressor_pm weekend

># 1 2 3 4 5 6

># women

># 7

>#

># Model-averaged coefficients:

># (full average)

># Estimate Std. Error Adjusted SE z value Pr(>|z|)

># (Intercept) 0.702488 0.736992 0.738701 0.951 0.34162

># weekend -0.038577 0.068216 0.068370 0.564 0.57259

># mood1_pm 1.852095 0.373702 0.374609 4.944 8e-07 ***

># mood1_pmc 0.126697 0.140143 0.140483 0.902 0.36713

># stressor 0.059710 0.100592 0.100836 0.592 0.55375

># stressor_pm 0.907331 0.303253 0.303988 2.985 0.00284 **

># baseage -0.001488 0.009063 0.009084 0.164 0.86991

># womenwomen -0.110640 0.193378 0.193538 0.572 0.56754

>#

># (conditional average)

># Estimate Std. Error Adjusted SE z value Pr(>|z|)

># (Intercept) 0.702488 0.736992 0.738701 0.951 0.34162

># weekend -0.047174 0.072697 0.072874 0.647 0.51741

># mood1_pm 1.852095 0.373702 0.374609 4.944 8e-07 ***

># mood1_pmc 0.126697 0.140143 0.140483 0.902 0.36713

># stressor 0.059710 0.100592 0.100836 0.592 0.55375

># stressor_pm 0.907331 0.303253 0.303988 2.985 0.00284 **

># baseage -0.004193 0.014838 0.014874 0.282 0.77800

># womenwomen -0.333482 0.195956 0.196433 1.698 0.08957 .

># ---

># Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1# Variable importance

sw(dd_caic)># mood1_pm mood1_pmc stressor stressor_pm weekend baseage

># Sum of weights: 1.00 1.00 1.00 1.00 0.82 0.35

># N containing models: 8 8 8 8 4 4

># women

># Sum of weights: 0.33

># N containing models: 4Bonus: Lasso (Least Absolute Shrinkage and Selection Operator)

The idea of Lasso, or more generally regularization, is that complex models tend to overfit in small samples and when the number of predictors is large. On the other hand, simple models, which means that when all predictors are assumed either not predictive or weakly predictive of the outcome, tend to underfit. Therefore, one can find the right fit by having the estimates to be somewhere between 0 and the maximum likelihood estimates. This is achieved in Lasso by adding a penalty in the minimization algorithm, usually called lambda, with larger lambda corresponding to a larger penalty.

Here is an example. Note that generally one standardizes the data so that the variables have standard deviations of 1s. It is, however, not discussed much in the literature whether this applies to cluster means and cluster mean centered variables for multilevel analysis. In the example below, I only standardize the raw variables.

stress_data <- read_sav(

unz(zip_data,

"SPSS_Chapter8/SPSS_Chapter8.sav")

)

stress_std <- stress_data %>%

mutate(across(

c(women, baseage, weekend, symptoms, mood, stressor),

~ (. - mean(., na.rm = TRUE)) / sd(., na.rm = TRUE)

))

# Subsample

stress_std <- stress_std %>%

drop_na() %>%

# filter(PersonID %in% random_persons) %>%

group_by(PersonID) %>%

# The `across()` function can be used to operate the same procedure on

# multiple variables

mutate(across(

c(symptoms, mood, stressor),

# The `.` means the variable to be operated on

list(

"pm" = ~ mean(., na.rm = TRUE),

"pmc" = ~ . - mean(., na.rm = TRUE)

)

)) %>%

ungroup() %>%

mutate(PersonID = as_factor(PersonID))# Maximum likelihood estimates

m2_full <- lmer(

symptoms ~ (mood_pm + mood_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) +

(mood_pmc + stressor | PersonID),

data = stress_std,

REML = FALSE

)># boundary (singular) fit: see help('isSingular')# Lasso with lambda = 19

# First obtain the predictor matrix

pred_mat <- model.matrix(

symptoms ~ (mood_pm + mood_pmc + stressor_pm + stressor) +

(women + baseage + weekend), data = stress_std)

m2_penalized <- glmmLasso(

# First argument with fixed effect; it does not support the `*` notation

symptoms ~ mood_pm + mood_pmc + stressor_pm + stressor +

women + baseage + weekend +

(mood_pm + mood_pmc):(stressor_pm + stressor) +

(mood_pm + mood_pmc):(women + baseage + weekend) +

(stressor_pm + stressor):(women + baseage + weekend) +

(mood_pm + mood_pmc):(stressor_pm + stressor):(women + baseage + weekend),

# Random effects

rnd = list(PersonID = ~ mood_pmc + stressor),

data = stress_std,

lambda = 20,

final.re = TRUE

)># Warning in est.glmmLasso.RE(fix = fix, rnd = rnd, data = data, lambda =

># lambda, : Random slopes are not standardized back!# Find lambda with lowest AIC

# lambda_grid <- seq(0, to = 100, by = 1)

# aic_grid <- rep(NA, length = length(lambda_grid))

# for (i in seq_along(lambda_grid)) {

# m2_penalized <- glmmLasso(

# # First argument with fixed effect; it does not support the `*` notation

# symptoms ~ mood_pm + mood_pmc + stressor_pm + stressor +

# women + baseage + weekend +

# (mood_pm + mood_pmc):(stressor_pm + stressor) +

# (mood_pm + mood_pmc):(women + baseage + weekend) +

# (stressor_pm + stressor):(women + baseage + weekend) +

# (mood_pm + mood_pmc):(stressor_pm + stressor):(women + baseage + weekend),

# # Random effects

# rnd = list(PersonID = ~ mood_pmc + stressor),

# data = stress_std,

# lambda = lambda_grid[i]

# )

# aic_grid[i] <- m2_penalized$aic

# }

# Compare the fixed effects

round(cbind("Full Model" = fixef(m2_full),

"Lasso" = m2_penalized$coefficients), 2)># Full Model Lasso

># (Intercept) 0.07 0.04

># mood_pm 0.63 0.60

># mood_pmc 0.02 0.00

># stressor_pm 0.31 0.33

># stressor 0.04 0.04

># women -0.23 -0.15

># baseage -0.01 0.00

># weekend -0.01 0.00

># mood_pm:stressor_pm -0.30 -0.12

># mood_pm:stressor -0.01 0.00

># mood_pmc:stressor_pm 0.02 0.00

># mood_pmc:stressor 0.01 0.04

># mood_pm:women -0.31 -0.15

># mood_pm:baseage -0.04 -0.06

># mood_pm:weekend -0.02 0.00

># mood_pmc:women 0.04 0.00

># mood_pmc:baseage 0.07 0.00

># mood_pmc:weekend 0.08 0.08

># stressor_pm:women -0.09 -0.16

># stressor_pm:baseage 0.03 0.02

># stressor_pm:weekend 0.10 0.00

># stressor:women -0.06 -0.04

># stressor:baseage 0.01 0.00

># stressor:weekend -0.06 0.00

># mood_pm:stressor_pm:women 0.53 0.00

># mood_pm:stressor_pm:baseage 0.05 0.06

># mood_pm:stressor_pm:weekend 0.10 0.00

># mood_pm:stressor:women -0.06 0.00

># mood_pm:stressor:baseage -0.11 -0.12

># mood_pm:stressor:weekend -0.13 -0.05

># mood_pmc:stressor_pm:women 0.03 0.00

># mood_pmc:stressor_pm:baseage -0.08 0.00

># mood_pmc:stressor_pm:weekend 0.21 0.23

># mood_pmc:stressor:women -0.01 0.00

># mood_pmc:stressor:baseage 0.02 0.00

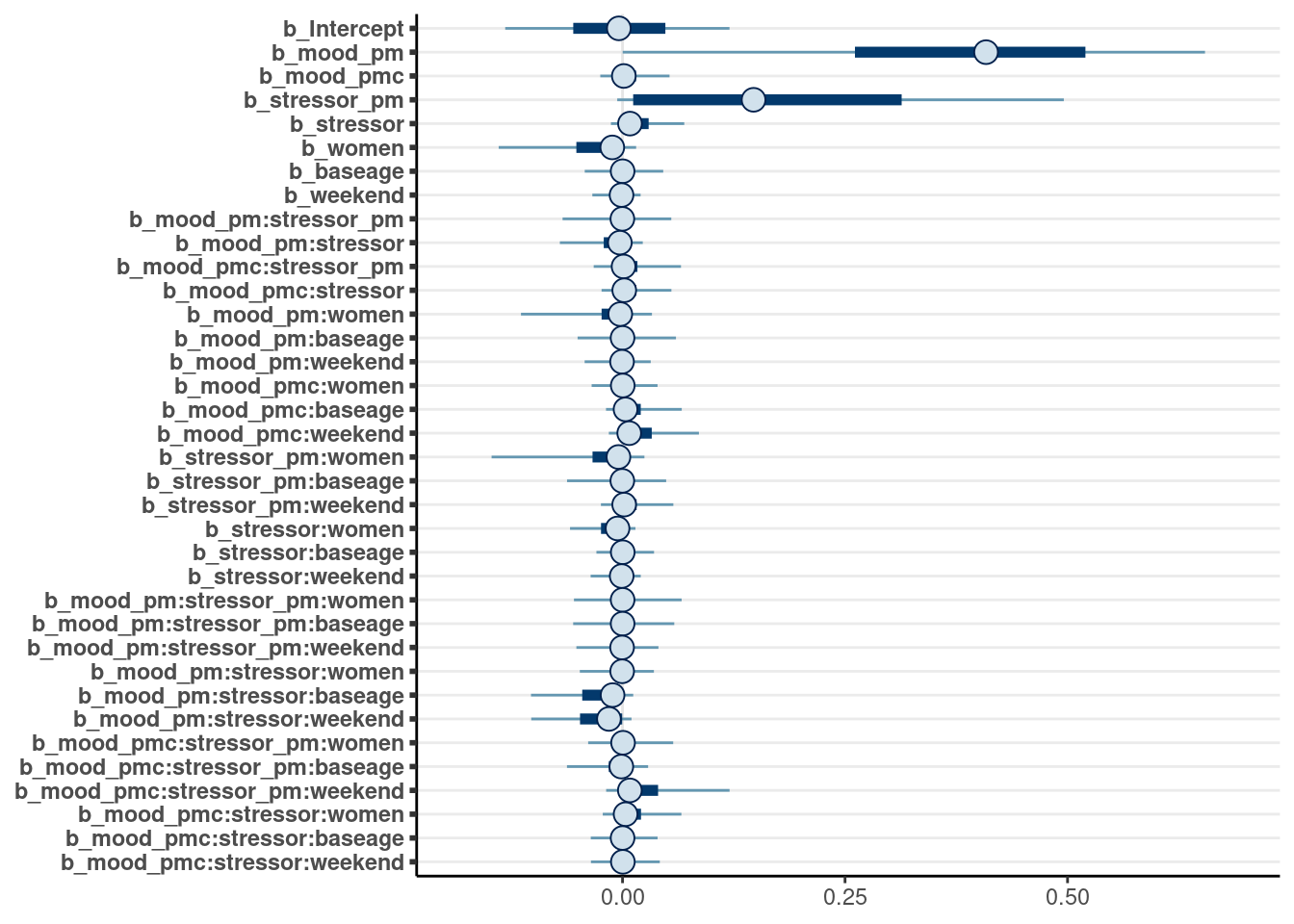

># mood_pmc:stressor:weekend -0.11 -0.10As can be seen, many of the coefficients have shrunken with Lasso. The variables that seem most useful in predicting symptoms are mood_pm, stressor_pm, women, mood_pm:stressor_pm, mood_pm:women, stressor_pm:women, mood_pm:stressor:baseage, mood_pmc:stressor_pm:weekend. The lambda value can be chosen using AIC or using cross-validation.

Bonus: Regularizing Prior

Hierarchical Shrinkage

This is a special type of prior that adaptively reguarlizes coefficients that are weakly supported by the data. To learn more, see the paper by Piironen & Vehtari (2017, doi: 10.1214/17-EJS1337SI). The following requires a relatively long running time as it requires the MCMC algorithm to run more slowly (with adapt_delta = .995).

m2_hs <- brm(

symptoms ~ (mood_pm + mood_pmc) * (stressor_pm + stressor) *

(women + baseage + weekend) + (mood_pmc * stressor | PersonID),

control = list(adapt_delta = .995, max_treedepth = 12),

data = stress_std, seed = 2152,

prior = prior(horseshoe(df = 3, par_ratio = 0.1), class = b),

file = "brms_cached_m2_hs"

)mcmc_plot(m2_hs, variable = "^b_", regex = TRUE)